Cyber Insight

A pillar that emphasizes the ethical, targeted, and measured use of AI to strengthen human values and capabilities, not replace them.

Overview

Cyber Insight is a pillar that focuses on the ethical, targeted, and auditable use of artificial intelligence (AI) to strengthen human values and capabilities, not replace them. It matters because AI can be a strategic force that improves efficiency, prediction, and security, but it can also become a source of risk when used without control, transparency, and preparedness.

This pillar governs how organizations prepare before adopting AI, ensuring that AI data, models, and processes comply with the principles of explainability, accountability, and trustless verification (verification that does not depend on trusting a single party). With Cyber Insight, organizations can build an auditable and fair AI ecosystem while mitigating potential threats from the inappropriate use of AI.

AI is also key to modern cybersecurity, whether for threat detection, risk analysis, or automated response. However, it can become a tool for attack if not managed properly. Cyber Insight therefore ensures that AI is placed in its proper role: helping humans make smarter, evidence-based decisions and maintaining the integrity of digital systems.


Key Components

AI Governance & SOP

Providing policies and standard operating procedures (SOPs) that define how AI may be used, the limits of its use, and its role in supporting humans, not replacing them.

AI Model Trustworthiness

Determining the criteria for a trustworthy AI model, including validation of the quality, accuracy, and bias risk of the model used.

Training Data Integrity & Compliance

Managing the quality, source, and legality of AI training data to avoid copyright infringement, privacy violations, or bias. Data must be clean, representative, and compliant with regulations (e.g., PDP Law).

Intellectual Property (IP) Control

Establishing ownership rights over AI outputs, including works, predictions, or decisions generated by AI, so that ownership is clear and does not lead to disputes.

Explainable AI (XAI)

Ensuring that AI decisions or outputs can be explained transparently, so that humans can understand the logic behind the predictions or recommendations made.

AI Ethics & Fairness

Establishing ethical principles in the development and application of AI, including fairness, non-discrimination, and accountability in every use of AI.

AI Security & Risk Mitigation

Anticipating AI threats (such as adversarial attacks or misuse of AI by malicious parties), and ensuring that AI functions safely within the digital ecosystem.

Human-in-the-Loop (HITL)

Maintaining human involvement in AI-based decision-making processes, ensuring that AI is only a supporting tool, not the main decision-maker.
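As an illustration only, the Human-in-the-Loop principle can be sketched as a small gate in which the AI only proposes an action and a named human records the final verdict. The names `Recommendation`, `Decision`, and `finalize` are hypothetical and do not refer to any Baliola product API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Recommendation:
    """An AI-produced suggestion (hypothetical structure)."""
    action: str        # what the model proposes
    confidence: float  # model confidence in [0, 1]
    rationale: str     # short explanation shown to the reviewer


@dataclass
class Decision:
    """The final, human-owned outcome of a recommendation."""
    action: Optional[str]  # None when the reviewer rejects the proposal
    decided_by: str        # identifier of the human reviewer
    ai_suggested: str      # kept so the proposal remains auditable


def finalize(rec: Recommendation, reviewer: str, approved: bool) -> Decision:
    """Turn a recommendation into a decision only via an explicit human verdict.

    There is no code path in which the model's confidence alone
    triggers an action; `approved` must come from the reviewer.
    """
    if not reviewer:
        raise ValueError("a human reviewer is required for every decision")
    return Decision(
        action=rec.action if approved else None,
        decided_by=reviewer,
        ai_suggested=rec.action,
    )


rec = Recommendation("block_ip", 0.97, "traffic matches known scanner pattern")
print(finalize(rec, reviewer="analyst-01", approved=True))
```

Keeping `ai_suggested` alongside the final action means a later audit can compare what the AI proposed with what the human actually decided.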

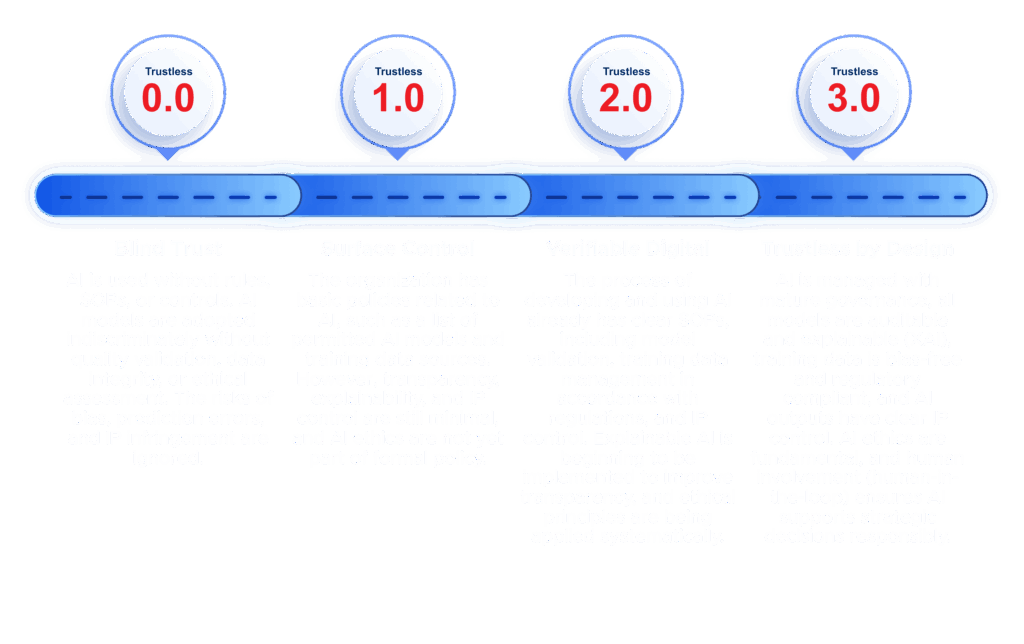

Cyber Insight Trustless Roadmap

Cyber Insight Implementation Program

The Cyber Insight Implementation Program (CIIP) is Baliola’s strategic program to help organizations design, manage, and implement AI in an ethical, transparent, and auditable manner. The program ensures that AI is placed in its proper role, with clear SOPs, IP controls, and compliance with digital regulations and ethics.

Assessment – AI Readiness Assessment

- AI Governance Assessment: Assess existing AI policies, SOPs, and governance, including controls over training data and AI models used.

- AI Trustworthiness Scan: Identify AI models that are prone to bias, operate as black boxes, or risk violating ethics and regulations.

- IP Risk & Compliance Check: Audit ownership of AI outputs (works, predictions, decisions) and the legality of training data (e.g., GDPR, PDP Law).

- Ethical & Explainability Baseline: Assess the extent to which existing AI already provides transparency, fairness, and accountability.
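One simple signal a bias scan of this kind might compute is the demographic parity gap: the difference in positive-prediction rates between groups. The sketch below is a minimal illustration under that assumption, not the method CIIP actually uses; the function names and sample data are hypothetical.

```python
from collections import defaultdict


def positive_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        if pred == 1:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in positive rate between any two groups.

    0.0 means all groups receive positive predictions at the same rate;
    larger values flag a model for closer review.
    """
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Illustrative data: binary model outputs and a group label per record.
preds = [1, 1, 0, 1, 0, 0, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(positive_rates(preds, groups))          # {'a': 0.75, 'b': 0.25}
print(demographic_parity_gap(preds, groups))  # 0.5
```

A single metric like this is only a starting point; a large gap is a prompt for human investigation rather than proof of unfairness.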

Consulting – Designing Governance & Framework

- AI Governance Blueprint: Development of AI policies, SOP standards, and ethical guidelines (AI Ethics Policy).

- Data & Model Review: Assistance in selecting or building trustworthy AI models (explainable & verifiable).

- IP Control Framework: Development of mechanisms for ownership of AI works and outputs, as well as prevention of copyright infringement.

- AI Explainability & Fairness Workshop: Educating technical and management teams on the importance of XAI and bias prevention.

Deployment – Trustless AI Implementation & Integration

- TraceTrust for AI: Immutable recording of AI activities (model updates, training data usage) on the blockchain for auditing purposes.

- MAC Fabric Integration: Trustless recording infrastructure for AI models, enabling third-party verification of AI results.

- AI Ethics Compliance Setup: Implementation of AI ethics and governance policy controls into business processes.

- Explainability Tools Deployment: Integration of tools that can explain AI results (XAI dashboards).
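The idea behind immutable recording of AI activities can be illustrated with a hash-chained log, where each entry commits to the one before it so that any later edit is detectable. This is a simplified, in-memory sketch and not the TraceTrust or MAC Fabric API; `append_event`, `verify`, and the event fields are hypothetical, and a real deployment would anchor the hashes on a blockchain rather than in a Python list.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry's predecessor


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the entry body."""
    payload = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_event(log: list, event: dict) -> dict:
    """Append an AI activity record that commits to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {"index": len(log), "event": event, "prev_hash": prev_hash}
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify(log: list) -> bool:
    """Recompute every hash and link; False if anything was altered."""
    prev_hash = GENESIS
    for i, entry in enumerate(log):
        body = {
            "index": entry["index"],
            "event": entry["event"],
            "prev_hash": entry["prev_hash"],
        }
        if (entry["index"] != i
                or entry["prev_hash"] != prev_hash
                or entry["hash"] != _digest(body)):
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_event(log, {"type": "model_update", "model": "fraud-v2"})
append_event(log, {"type": "training_data_used", "dataset": "claims-2024"})
print(verify(log))  # True

log[0]["event"]["model"] = "fraud-v3"  # simulate tampering
print(verify(log))  # False
```

Because each entry's hash covers the previous hash, an auditor who holds only the latest hash can detect changes to any earlier record.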

Re-Assessment – Monitoring & Continuous Improvement

- Periodic AI Governance Review: Regular audits to evaluate AI models, training data quality, and ethical compliance.

- Bias & Risk Mitigation Updates: Identification of new biases and ongoing model adjustments.

- AI Accountability Report: Verifiable transparency report on AI usage.

- Training & Awareness Refresh: Team education on AI trends, ethics, and emerging risks.

References

EU AI Act (2024) – European Union regulation on the safe, ethical, and auditable development and deployment of AI.

OECD AI Principles – Global guidelines for transparent, fair, and accountable AI.

ISO/IEC 42001 – Artificial Intelligence Management System (AIMS) for AI governance.

ISO/IEC 23894 – Risk Management for AI, providing guidance on managing risks from AI systems.

ISO/IEC 38507 – Governance of IT for AI, emphasizing responsibility and transparency in the use of AI.

NIST AI Risk Management Framework (AI RMF 1.0) – Framework for mitigating AI risks, including fairness, transparency, and privacy.

NIST SP 1270 – Towards a Standard for Identifying and Managing Bias in Artificial Intelligence.

IEEE 7000 Series – AI ethics standards, covering bias, security, and model transparency.

See Other Pillars of Cyber Trust

Cyber Privacy

Ensuring individuals have ownership and control over their digital identities and data, with privacy by design

Cyber Integrity

Guaranteeing the authenticity and immutability of data and decisions through verifiable audit trails

Cyber Resilience

Building systems that can withstand and recover from attacks without compromising core functionality